The Traveling Salesperson Problem

The Traveling Salesperson Problem, aka TSP, is a classic problem in Computer Science. In it, the traveler wants to visit all the cities on their route and travel the least amount of distance. To quote Wikipedia,

It is an NP-hard problem in combinatorial optimization, important in theoretical computer science and operations research.

The important part to note here is “NP-hard,” which means “If P ≠ NP, then NP-hard problems cannot be solved in polynomial time.“

And Britanica says “This is an example of an NP-complete problem (from nonpolynomial), for which no known efficient (i.e., polynomial time) algorithm exists.“

NP-hard and NP-Complete are classes of Computer Science problems that have been studied for decades. I find it really curious that Wikipedia and Britanica disagree an the classification of this classic problem. NP-Hard and NP-Complete are not the same.

I barely understood this when I learned it at MIT so its really ok if you’re not getting it now. So what does this all mean?

It means that we can’t solve the problem. But can we? I remember years ago when Susan was heading out to work to visit some clients. She had a small number to visit that morning. Over breakfast she told me that she had put their addresses into her cheap Garmin GPS and told it to compute an optimal route. I told her that that wasn’t possible. She told me she had just done so and wondered why she was with someone so stupid.

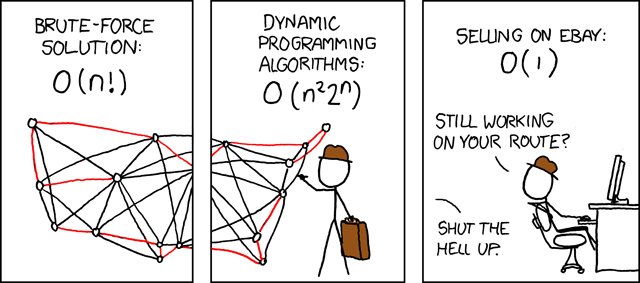

The xkcd cartoon above actually a most concise explanation. Lets first go over what O(n!) and O(n22n) mean. O() is known as Big O Notation. It is very important in Computer Science and, frankly, not very important outside of it. And that’s a shame, because the knowing the difference between very hard and extremely hard and impossibly stupendously hard is difficult to express in English and pretty easy to express with math. And the TSP is lies between very hard and impossibly stupendously hard.

Karuna Sehgal’s blog, A Simplified Explanation of the Big O Notation is worth reading if you want some details, but this quote is all we need here.

With Big O Notation we express the runtime in terms of — how quickly it grows relative to the input, as the input gets larger.

So O(n!) reads as “on the order of n factorial” and O(n22n) means on the order of n-squared times 2 to th n-th. What do those mean? Let’s play with some numbers. Say we have 5 cities to visit and it takes our computer 1 second to process a city pairs. A city pair is just the distance between two cities. Here’s a chart showing all the distances between each of the 5 cities, it shows the city pairs.

| Columbus | Charlotte | Nashville | Cincinnati | Indianapolis | |

| Columbus | 0 | ||||

| Charlotte | 426 | 0 | |||

| Nashville | 443 | 409 | 0 | ||

| Cincinnati | 107 | 462 | 273 | 0 | |

| Indianapolis | 176 | 112 | 289 | 112 | 0 |

This was pretty tedious to generate, it will be last one I do by hand.

With n=5, O(n!) is O(120), and O(n22n) is O(800). Remember, our computer can process a city pair in one second. (It is a really old slow computer.)

So if we use the O(n) approach we’ll take 2 minutes to come up with the answer, and the O(n22n) approach will take more than 13 minutes. So why would you use the O(n22n) program?

Watch what happens when we put more cities in place.

| n | n! | n22n | n! time | n22n time |

| 5 | 120 | 800 | 2 min | 13.3 min |

| 6 | 720 | 2,304 | 12 min | 38.4 min |

| 7 | 5,040 | 6,272 | 84 min | 104 min |

| 8 | 40,320 | 16,384 | 11.2 hours | 4.6 hours |

| 9 | 362,880 | 41,472 | 4.2 days | 0.5 days |

| 10 | 3,628,800 | 102,400 | 42 days | 1.2 days |

| 11 | 399,162,800 | 247,808 | 1.26 years | 2.87 days |

Now of course those numbers are ridiculously large, but that’s not the point. They are large because our pretend computer is impossibly slow

The real point is as the number of cities goes up the brute force approach, the n!, approach is impossible. Lets now assume our computer doesn’t take 1 second to process a city pair, but instead it can process 100 million city pairs a second. But… we’ll add more cities!

| n | n! | n22n | n! time | n22n time |

| 11 | 39,916,800 | 247,808 | 40 sec | 0 |

| 20 | huge | 419,430,400 | 77 years | 4.2 sec |

| 50 | makes 20! look small | between 20! and 50! | wow | 89 years |

What is wow? 963,764,456,159,956,000,000,000,000,000,000,000,000,000,000,000,000 years. Lets put that into perspective. Pretend that this TSP is really important to the plant. Let’s build a million computers per person on the planet. That’s still 123,559,545,661,533,000,000,000,000,000,000,000 years and the Universe is only 13,800,000,000 years old. So no matter how big you thing these numbers are, they’re bigger than that. So the TSP cannot be solved by brute force.

We know that the n! approach is the brute force approach, but what is n22n approach? Where does that come from. In 1962 Michael Held and Richard Karp found an algorithm which guaranteed it was less than O(n22n). To quote William Cook:

For anyone interested in solving large TSP instances, it is bitter news that in the forty years since Held and Karp no better guarantee has been found for the problem. (See the nice survey paper by Gerhard Woeginger.) This is disappointing since for n=30 the Held-Karp guarantee is already quite a large number, and for n=100 it is an impossibly large number to handle with today’s computing platforms.

We can see choosing the right algorithm is essential so what’s up? 89 years is still way too long. Brute force is too slow, Held-Karp is too slow.

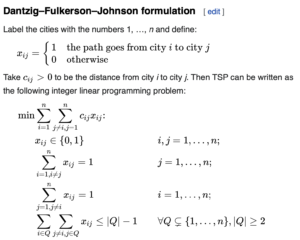

Researchers George Dantzig, Ray Fulkerson, and Selmer Johnson solved this in 1954 while employed at Rand. So what did they do? They were extremely clever. They used a technique knows as linear programming, and I’m not even going to pretend that I understand that.

So what’s the difference between these two algorithms? I certainly don’t know. I had assumed the Dantzig et al had either broken the O(n22n) barrier or their solution was usable, but not perfect. So I reached out the Computer Science experts online and asked the question on cs.stackexchange.com. Feel free to read the detailed question there, it is only for Computer Science geeks though.

Nobody had a good answer, so I’m starting to feel better already. LOL. But this tidbit came out.

Dantzig et al. uses cutting planes on top of the Simplex Method and comes with no subexponential time upper bounds that I know of but is fast in practice.

j_random_hacker

The “but fast in practice” is the interesting part. So I asked for some clarification.

Average case complexity is different from worst case complexity. E.g. interpolation search has O(logjlog𝑛) average case complexity and Θ(𝑛) worst case complexity. Meanwhile binary search has Θ(log𝑛) complexity for both average cases and worst cases.

rsu9384

Now that really got me thinking. Held-Karp and Dantzig are equally slow, except that Dantzig is fast in practice? Why would that be? I have a guess, and aside from writing a scholarly paper, which is so not for me, I’m just going to guess.

Could Dantzig et al’s Cutting Planes work because they are applied to cities? Cities are started in reasonable locations, they won’t be in worse case locations. So maybe that’s why the problem is solvable.

This blog post took weeks to write, wow, I had no idea of the journey I was starting when I went down this route. Thanks for sticking with me.